$64,944 to support 25,000 customers in August — A full breakdown of Kit's AWS bill

At Kit, our core values include “Teach everything you know” and “Work in public.” We already share our financials, and beginning with this post, we’re taking another step toward these values by sharing our AWS bill.

Overview

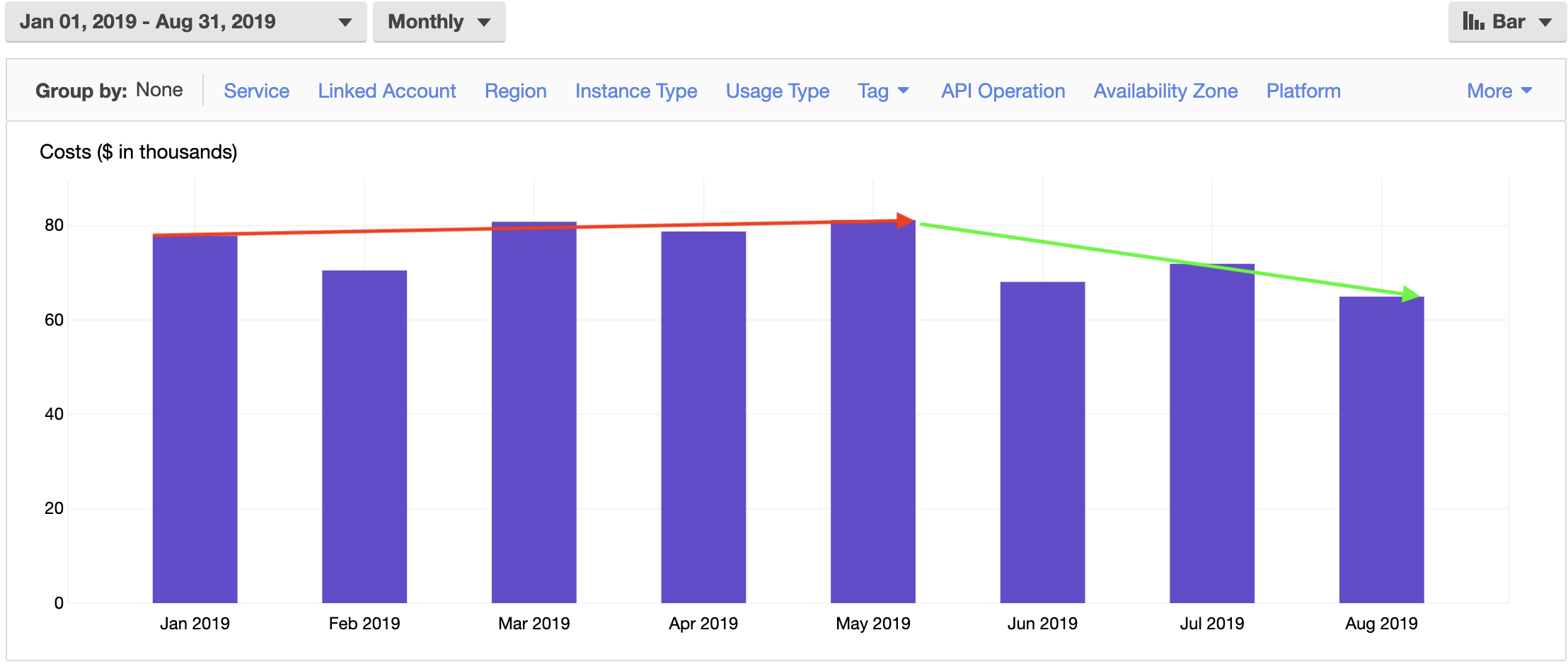

We spent $64,944.78 on AWS in August. This is down 10% from July and is 4.3% of MRR in August. The drop is the result of the work we did in June and July to improve our MySQL database. I mentioned it last month, but these improvements not only decrease our bill but also increase our infrastructure’s overall efficiency.

High-level breakdown:

- EC2-Instances - $19,525.87 (0%)

- Relational Database Service - $19,020.12 (-27%)

- S3 - $11,107.75 (0%)

- EC2-Other - $5,026.44 (+3%)

- Support - $4,378.71 (-14%)

- Others - $5,885.88 (+7%)
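As a quick sanity check, the six categories above should add back up to the headline total. This minimal Python sketch sums them and ranks each category's share of the bill. The dollar figures are copied from the list; the `summarize` helper is just an illustrative name, not part of any tool we use.

```python
# August totals by top-level AWS service category, copied from the list above.
BREAKDOWN = {
    "EC2-Instances": 19_525.87,
    "Relational Database Service": 19_020.12,
    "S3": 11_107.75,
    "EC2-Other": 5_026.44,
    "Support": 4_378.71,
    "Others": 5_885.88,
}


def summarize(breakdown):
    """Return the total and each category's share of it, largest first."""
    total = sum(breakdown.values())
    shares = sorted(
        ((name, cost / total) for name, cost in breakdown.items()),
        key=lambda item: item[1],
        reverse=True,
    )
    return total, shares


if __name__ == "__main__":
    total, shares = summarize(BREAKDOWN)
    print(f"total: ${total:,.2f}")
    for name, share in shares:
        print(f"{name:<28} {share:6.1%}")
```

With these figures the categories sum to $64,944.77, one cent under the headline, which is what you'd expect from rounding each line to the cent. EC2-Instances and RDS each come out to roughly 30% of the bill.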

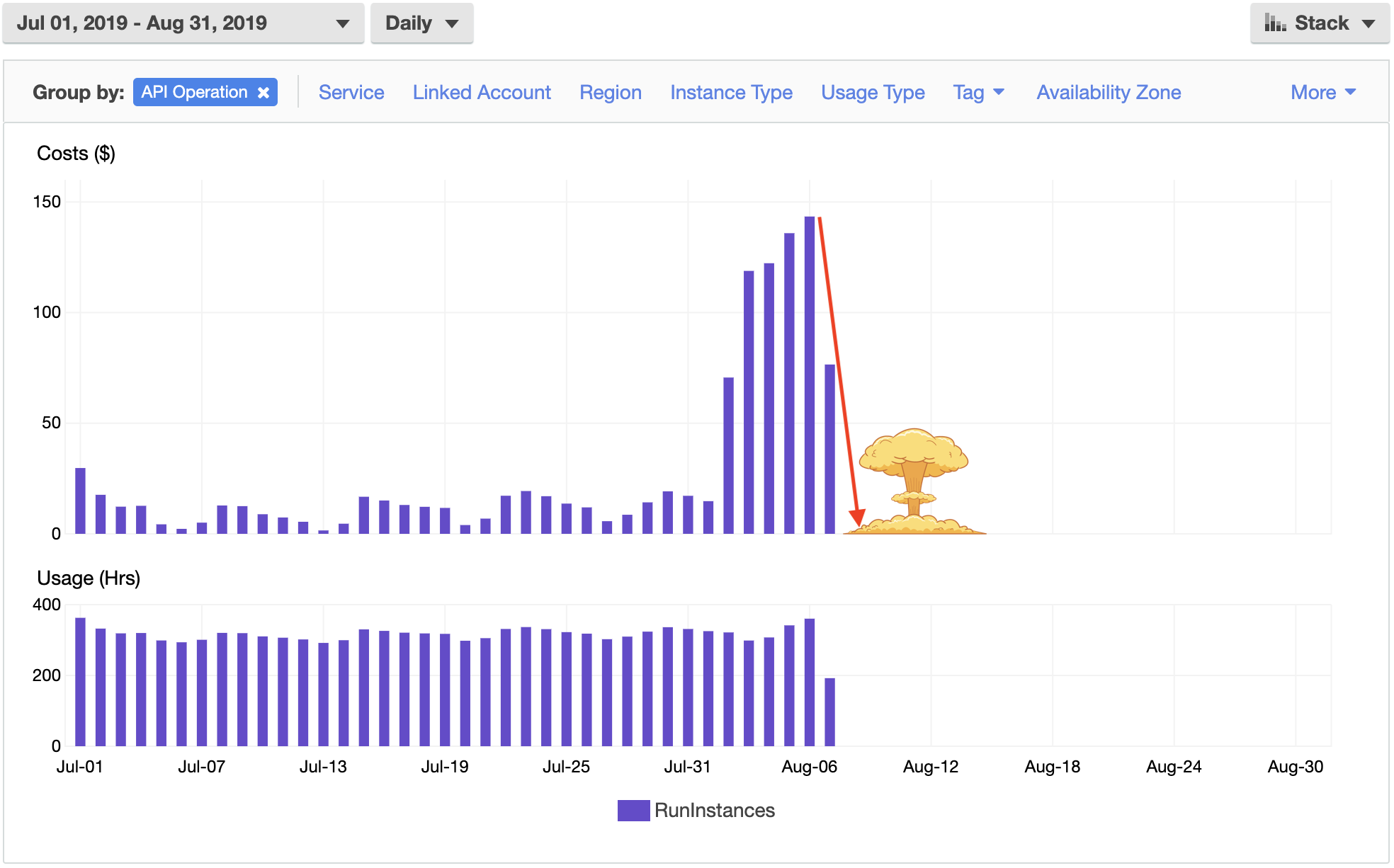

EC2-Instances - $19,525.87 (0%)

This is huge because we’re now spending more on our own managed servers than we are on our database. As far as I know, this is the first time this has ever happened. It’s also important to note that even though our database cost decreased, our EC2 costs stayed flat between July and August. The other good news is that there is a fair amount of fat on this bill that we should be able to trim in the upcoming months, although it will take us some time. Until then, I expect this bill to increase because we’re working on some big projects.

Service breakdown

USE2-HeavyUsage:i3.2xlarge - $6,687.07 (0%)

- These are our Cassandra and Elasticsearch clusters

- We use Cassandra to store massive amounts of data

- We use Elasticsearch to search through massive amounts of data

- This bill is fixed because we have these instances reserved

- This bill is going to increase in the upcoming months as we add another datacenter to our Cassandra cluster and fully migrate to the ELK stack

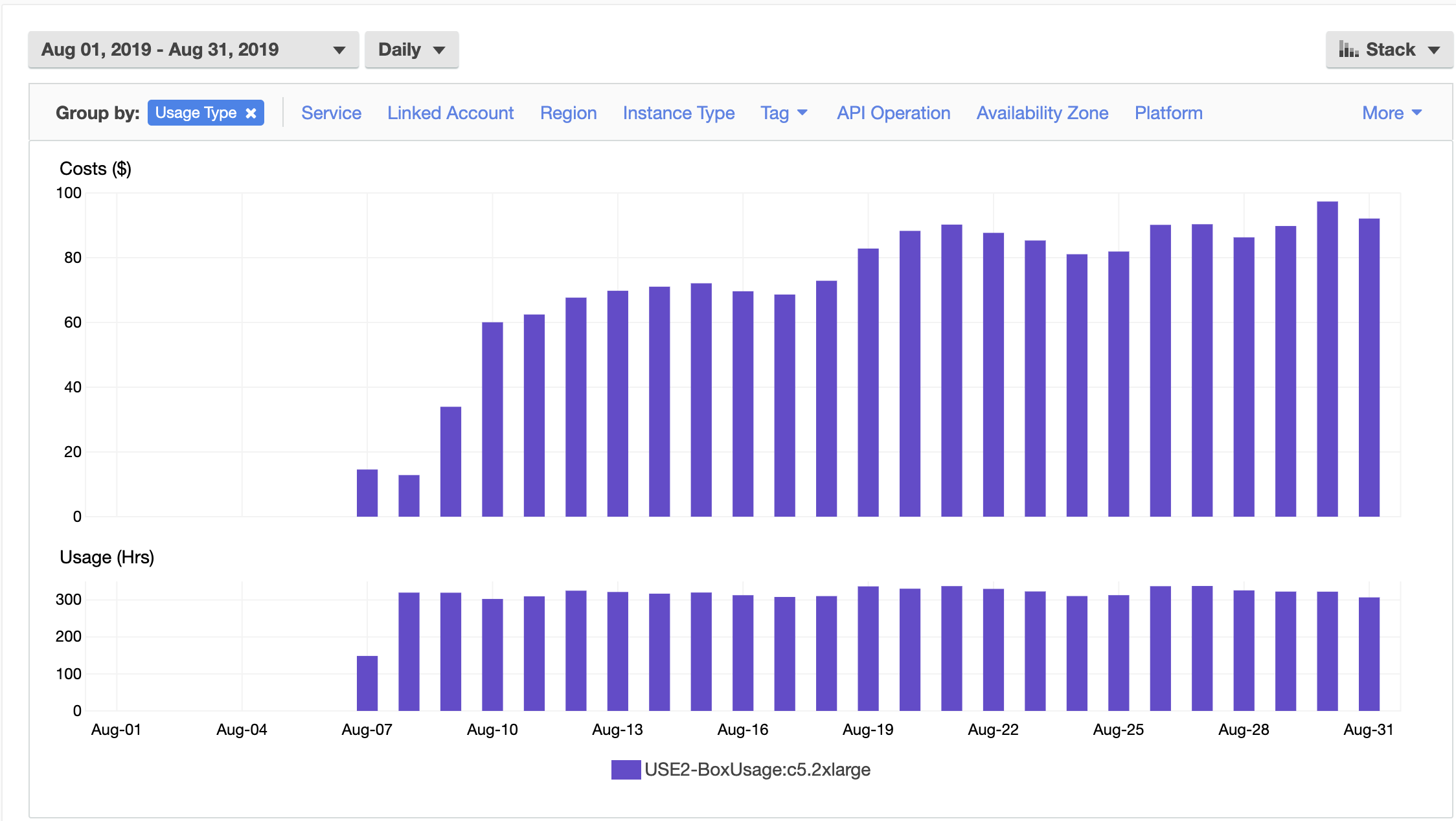

USE2-BoxUsage:c5.2xlarge - $1,818.91 (-)

- Our c4.2xlarge reservations ran out at the beginning of August, so we upgraded all of those instances to c5.2xlarge

- We use these for our web apps as well as our email sending workers

- These are compute-optimized instances, which means they are meant for work that requires a lot of CPU power

- This is on-demand pricing. We can save more money here by reserving more instances.

- We didn’t migrate to this instance type until a few days into August, so we can expect this bill to increase until we reserve the instances

USE2-HeavyUsage:c5.2xlarge - $1,514.30 (-)

- These are the same as the above, except this is the reserved price.

- We have 12 of these instance types reserved right now and are likely to reserve more in the future

- We didn’t reserve these instances until August 7th, so we should expect this bill to be higher in September, when there will be seven additional days of reservations to pay for. After that, this bill will be fixed.

USE2-DataTransfer-Out-Bytes - $1,317.49 (+22%)

- This is the cost of our apps communicating with each other as well as with the internet

- This is also affected by our backup strategies. We’re running normal backups as well as disaster recovery backups.

- The disaster recovery backups are new as of July, so August was the first month that showed how much we will typically spend on a full month of disaster recovery data transfer

- This bill will increase in the future as we increase data transfer between regions with the secondary Cassandra cluster in a different region

USE2-BoxUsage:t3.medium - $1,293.15 (+25%)

- These are burstable instances. That means they are useful for workloads that experience intermittent bursts of CPU usage.

- We use these instances for many different workloads, ranging from email processing to link tracking to Elasticsearch indexing.

- Right now, we have about 50 of these instances running, and they are all using on-demand pricing. We can save some money here when we decide to reserve some of these instances.

- I don’t expect this cost to change too much because we’re sized appropriately. We are unlikely to need to increase our instance count for a while.

- If it does increase, it will only be because we decrease the count of a different, more expensive EC2 instance type.

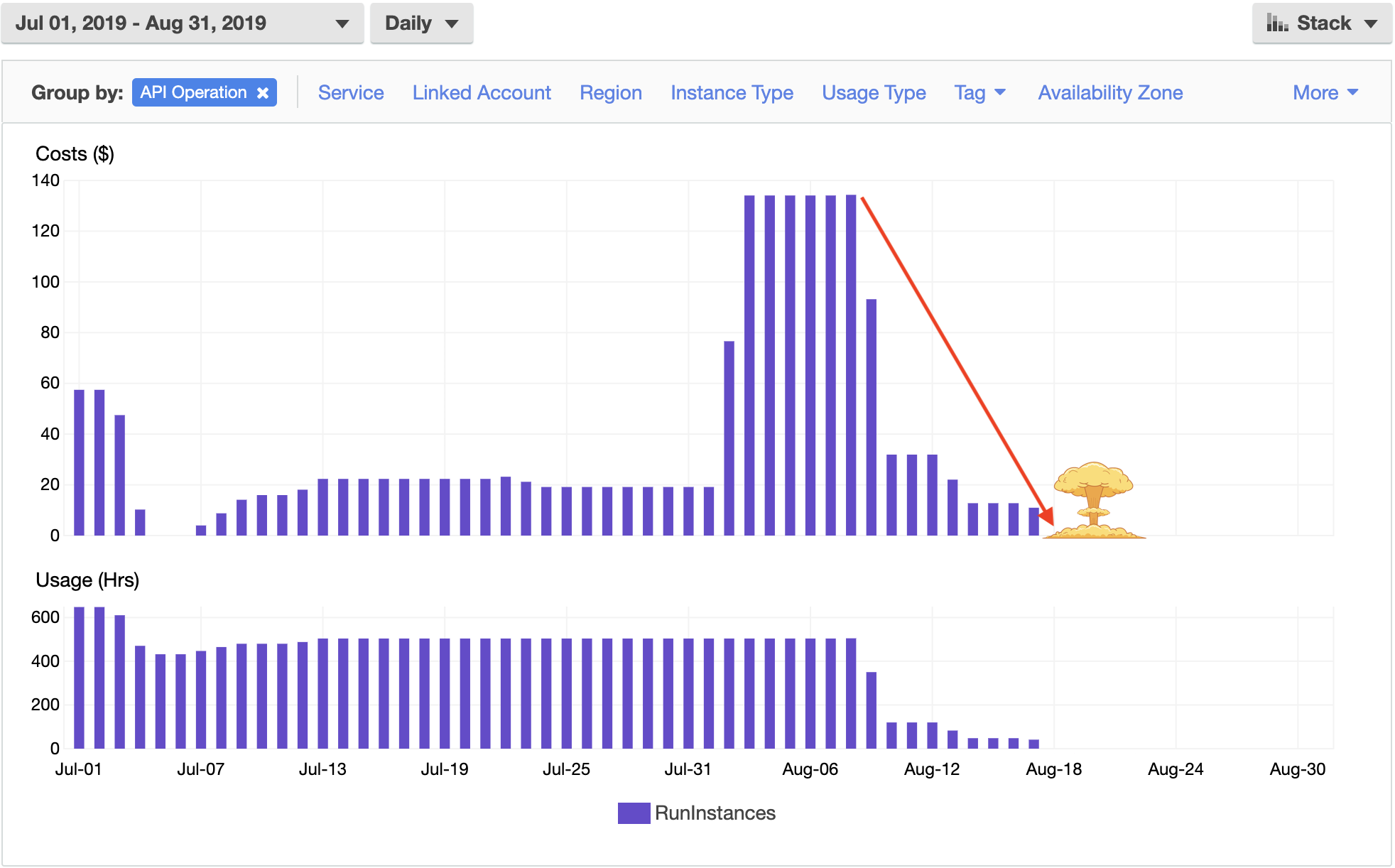

USE2-BoxUsage:r4.xlarge - $1,160.66 (+79%)

- These are memory-optimized instances that we never really needed but were stuck with due to a mistake made while reserving instances last year

- Nobody liked using these instances

- The 79% increase looks bad, but it’s actually good. Our reservations for these instances expired at the beginning of August, so we paid on-demand prices instead of reserved prices.

- Because the reservations expired, we were able to remove all of these unnecessary instances, so we’ll no longer have to pay this bill

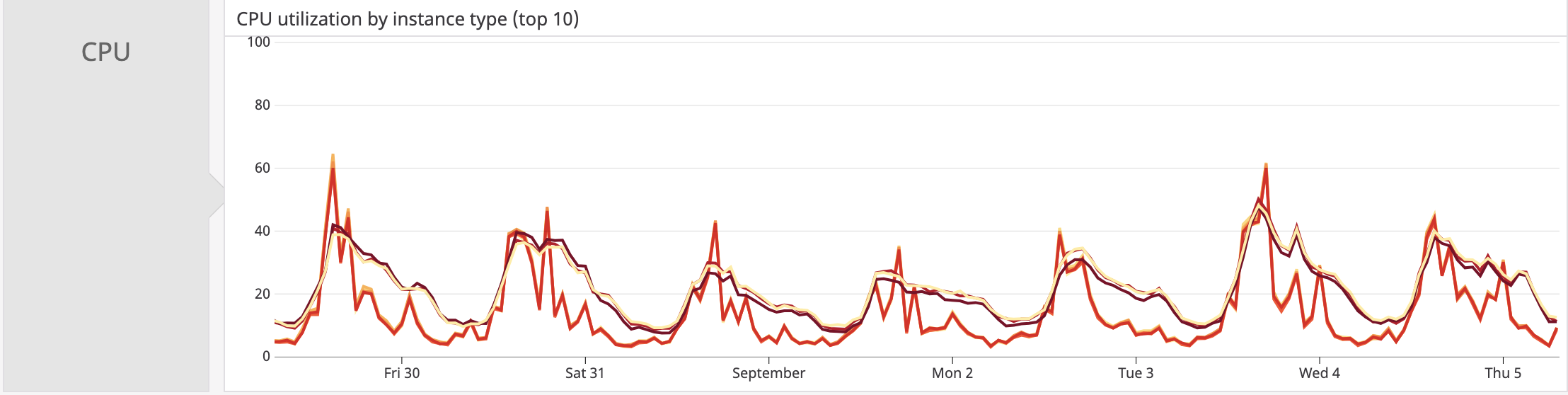

USE2-BoxUsage:t3.xlarge - $1,116.92 (+4%)

- These are in the same family as the t3.medium instances above. They are burstable instances used to handle traffic that comes in bursts.

- Instances in the t family have a baseline performance and a credit system. An instance earns CPU credits at a fixed rate, so credits build up while CPU use stays under the baseline. When CPU use goes above the baseline, the instance spends credits faster than it earns them. If we go above the baseline when we’re out of credits, AWS charges us extra (this is how T3 instances behave in unlimited mode, which is their default).

- For t3.xlarge instances, the baseline is 40% CPU usage. Currently, all of our t3.xlarge instances idle between 15% and 25% CPU usage.

- We use these for GDPR tagging and for workers that perform very expensive tasks on the database throughout the day.

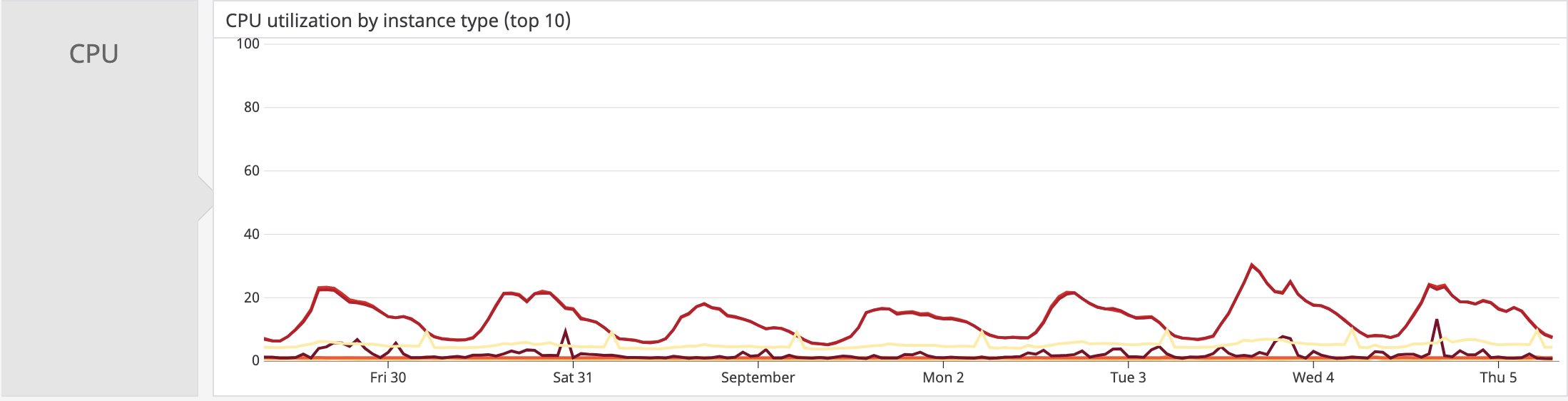

- Looking at our CPU usage in Datadog, you can see the bursts of usage we experience in a given day.

- We might be underutilizing these instances. If we were to drop these workloads down one size, we’d see an increase in the amplitude of the CPU use. However, I’d expect us to still idle under the 30% baseline set by the next size down.

- We haven’t changed the number of these instances running in a couple of months. I expect this bill to either remain flat or decrease if we decide to resize the workloads to something smaller and more appropriate.
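The credit rules above are easier to reason about with numbers. Below is a simplified Python model, not AWS's exact accounting (for example, it ignores how surplus credits get paid back over time). It relies on AWS's definition that one CPU credit is one vCPU at 100% for one minute, so an instance earns `vcpus * baseline * 60` credits per hour, spends `vcpus * utilization * 60`, and can bank at most 24 hours of earnings. The 4-vCPU count for t3.xlarge comes from AWS's instance specs; `simulate_credits` is a hypothetical helper.

```python
def simulate_credits(hourly_utilization, vcpus, baseline, start_balance=0.0):
    """Track a burstable instance's CPU-credit balance hour by hour.

    Simplified model: the instance earns vcpus * baseline * 60 credits per hour
    and spends vcpus * utilization * 60. Returns (final_balance, surplus), where
    surplus counts credits used after the balance hit zero, which is the usage
    that unlimited mode can bill extra for.
    """
    earned_per_hour = vcpus * baseline * 60
    cap = earned_per_hour * 24  # at most 24 hours of earned credits can be banked
    balance, surplus = start_balance, 0.0
    for utilization in hourly_utilization:
        balance += earned_per_hour - vcpus * utilization * 60
        if balance < 0:
            surplus += -balance  # balance ran dry, so the rest of this hour's use is surplus
            balance = 0.0
        balance = min(balance, cap)
    return balance, surplus


# A t3.xlarge (4 vCPUs, 40% baseline) idling at 20% for a full day.
print(simulate_credits([0.20] * 24, vcpus=4, baseline=0.40))
# A 2-vCPU instance with a 30% baseline (like a t3.large) at 70% for 10 hours, starting empty.
print(simulate_credits([0.70] * 10, vcpus=2, baseline=0.30))
```

At a 20% idle on a t3.xlarge, the balance only grows (about 1,152 credits after a day, with no surplus). The second case ends with an empty balance and about 480 surplus credits, which is the kind of sustained above-baseline usage that unlimited mode charges for.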

USE2-BoxUsage:c4.2xlarge - $682.39 (+89%)

- These are the old generation of c instances that we used to use for our web apps and a few workers.

- The 89% increase looks bad, but it’s actually good because our reservations for these instances expired at the beginning of August, so we paid on-demand prices instead of reserved prices.

- Because the reservations expired, we were able to upgrade all of these instances to the newer and cheaper c5.2xlarge instance type that I wrote about above.

- We’ve upgraded all of these instances so we’ll no longer have to pay this bill.

USE2-BoxUsage:i3.large - $613.41 (+5%)

- These are the staging Elasticsearch and Cassandra clusters

- They are smaller than the production ones because we don’t need that much power for our staging environment.

- This bill will increase because we have a new Elasticsearch cluster in staging used for the ELK stack.

- We can also expect this bill to increase as we bring our staging environment closer to parity with production.

USE2-BoxUsage:t3.large - $490.30 (+22%)

- These are also in the t family and follow the same burst rules I outlined above.

- The baseline for t3.large instances is 30% CPU usage.

- We use these for a few different workers, the geolocator app, and other miscellaneous boxes.

- We look to be overprovisioned here as well. All of our workloads using these instances are well under the baseline and never appear to burst above 30%.

- This isn’t a very expensive bill, but we can definitely optimize here to see some cost savings. I’d expect this bill to remain flat or to decrease in the future.
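One way to sanity-check a resize is to assume the workload needs the same total CPU time, so utilization scales with the ratio of vCPU counts. That is a naive assumption (it ignores memory, I/O, and uneven load), and the helper names below are hypothetical. The vCPU counts and baselines are AWS's published T3 specs, consistent with the 30% and 40% baselines quoted above.

```python
# (vCPUs, baseline utilization) for the T3 sizes discussed in this post.
T3_SIZES = {
    "t3.medium": (2, 0.20),
    "t3.large": (2, 0.30),
    "t3.xlarge": (4, 0.40),
}


def projected_utilization(current_util, current_size, target_size):
    """Naive estimate: the same CPU work spread over the target's vCPUs."""
    current_vcpus, _ = T3_SIZES[current_size]
    target_vcpus, _ = T3_SIZES[target_size]
    return current_util * current_vcpus / target_vcpus


def fits_baseline(current_util, current_size, target_size):
    """True if the projected utilization stays at or under the target's baseline."""
    _, target_baseline = T3_SIZES[target_size]
    return projected_utilization(current_util, current_size, target_size) <= target_baseline


# Our t3.xlarge workloads idle between 15% and 25% CPU.
for idle in (0.15, 0.25):
    projected = projected_utilization(idle, "t3.xlarge", "t3.large")
    print(f"{idle:.0%} on t3.xlarge -> about {projected:.0%} on t3.large")
```

Under this naive model, a 15-25% idle on four vCPUs becomes roughly 30-50% on the two vCPUs of a t3.large, at or above its 30% baseline, so a trial resize watched in Datadog is the safer way to confirm the savings. Going from t3.large to t3.medium keeps the same two vCPUs, so utilization would stay put while the baseline drops to 20%.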

Final Thoughts on EC2

I mentioned above that there is a good amount of fat on this bill that we could trim in the future. After writing this, I’m finding that there is a lot of low-hanging fruit that we can take advantage of for some quick billing wins. Aside from those, you might have noticed that most of the line items are named X-BoxUsage:X. That means we’re paying on-demand prices for them, while the X-HeavyUsage:X items are reserved. Once we’ve figured out the baseline resources we need to maintain a healthy application, we’ll be able to reserve more instances and see more billing wins.
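Because the pricing model is encoded in the usage type name, the on-demand versus reserved split can be pulled straight out of a cost report. This Python sketch applies the naming convention described above (BoxUsage for on-demand, HeavyUsage for reserved) to the EC2-Instances line items from this post. The regex and the `split_by_pricing` helper are illustrative, not an AWS API.

```python
import re
from collections import defaultdict

# EC2-Instances line items for August, copied from the breakdown above.
LINE_ITEMS = {
    "USE2-HeavyUsage:i3.2xlarge": 6_687.07,
    "USE2-BoxUsage:c5.2xlarge": 1_818.91,
    "USE2-HeavyUsage:c5.2xlarge": 1_514.30,
    "USE2-DataTransfer-Out-Bytes": 1_317.49,
    "USE2-BoxUsage:t3.medium": 1_293.15,
    "USE2-BoxUsage:r4.xlarge": 1_160.66,
    "USE2-BoxUsage:t3.xlarge": 1_116.92,
    "USE2-BoxUsage:c4.2xlarge": 682.39,
    "USE2-BoxUsage:i3.large": 613.41,
    "USE2-BoxUsage:t3.large": 490.30,
}

# <region>-<BoxUsage|HeavyUsage>:<instance type>; anything else counts as "other".
USAGE_TYPE = re.compile(r"^(?P<region>[A-Z0-9]+)-(?P<kind>BoxUsage|HeavyUsage):(?P<instance>\S+)$")
PRICING = {"BoxUsage": "on-demand", "HeavyUsage": "reserved"}


def split_by_pricing(line_items):
    """Sum costs into on-demand, reserved, and other buckets by usage-type name."""
    totals = defaultdict(float)
    for usage_type, cost in line_items.items():
        match = USAGE_TYPE.match(usage_type)
        bucket = PRICING[match["kind"]] if match else "other"
        totals[bucket] += cost
    return dict(totals)


for bucket, cost in sorted(split_by_pricing(LINE_ITEMS).items()):
    print(f"{bucket:<10} ${cost:,.2f}")
```

For these line items it reports about $7,176 on-demand, $8,201 reserved, and $1,317 of data transfer. The r4.xlarge and c4.2xlarge charges are already gone, so the remaining on-demand spend is smaller, but that bucket is where new reservations would help most.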

Relational Database Service (RDS) - $19,020.12 (-27%)

Again, this is huge. According to Baremetrics, we have 25,451 customers, and Adam Jones pointed out at the retreat that this is the first time we’ve paid less than $1 per customer for our database. This is all coming from the time and effort we’ve invested in our data storage infrastructure.
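The per-customer figure is simple division. A tiny sketch using the Baremetrics customer count and the August totals from this post (`cost_per_customer` is just an illustrative helper):

```python
CUSTOMERS = 25_451  # Baremetrics customer count quoted above


def cost_per_customer(monthly_cost, customers=CUSTOMERS):
    """Monthly spend divided evenly across customers."""
    return monthly_cost / customers


print(f"RDS per customer:       ${cost_per_customer(19_020.12):.2f}")
print(f"Total AWS per customer: ${cost_per_customer(64_944.78):.2f}")
```

That works out to about $0.75 per customer for RDS and about $2.55 per customer for the whole AWS bill.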

Service breakdown

- USE2-HeavyUsage:db.r5.12xl - $4,949.68 (+254%)

- This is our MySQL master database.

- We have it reserved, so we can expect to pay this amount every month for the next year.

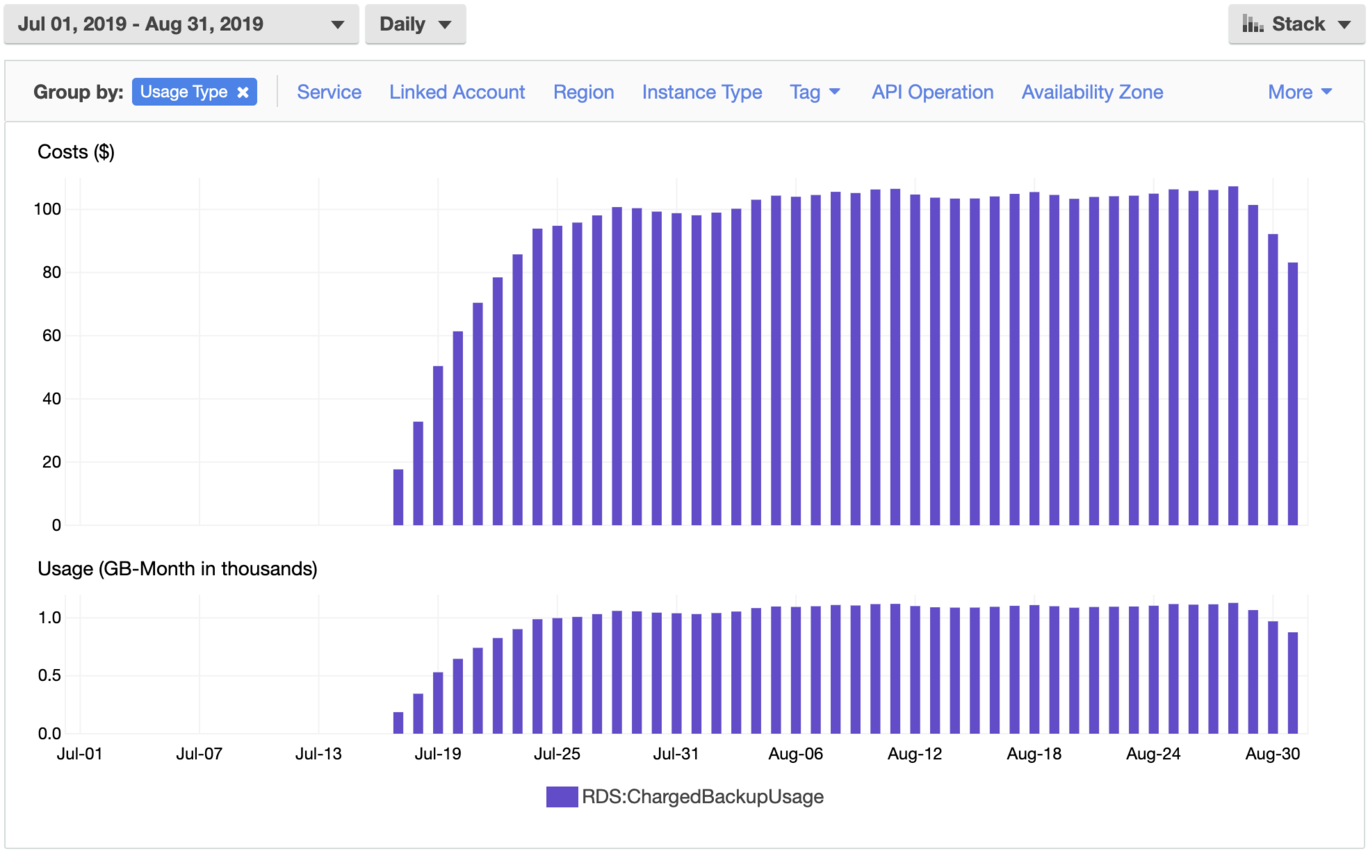

- RDS:ChargedBackupUsage - $3,195.10 (+270%)

- This is the cost of the backups we run for our disaster recovery account

- The daily cost of this is pretty flat, but we had some issues in the last days of August running the backups

- We can expect a small increase in this bill for September because we’ve fixed the problem that stopped our backups from working properly from August 28 to August 30.

- USE2-InstanceUsage:db.r4.8xlarge - $2,856.96 (+26%)

- This is one of our MySQL replicas.

- We have this on-demand right now because we aren’t sure whether we’ll need it for the rest of the year.

- As long as we keep this around, we can expect to pay about this much every month.

- We need to decide if we want to reserve it or get rid of it.

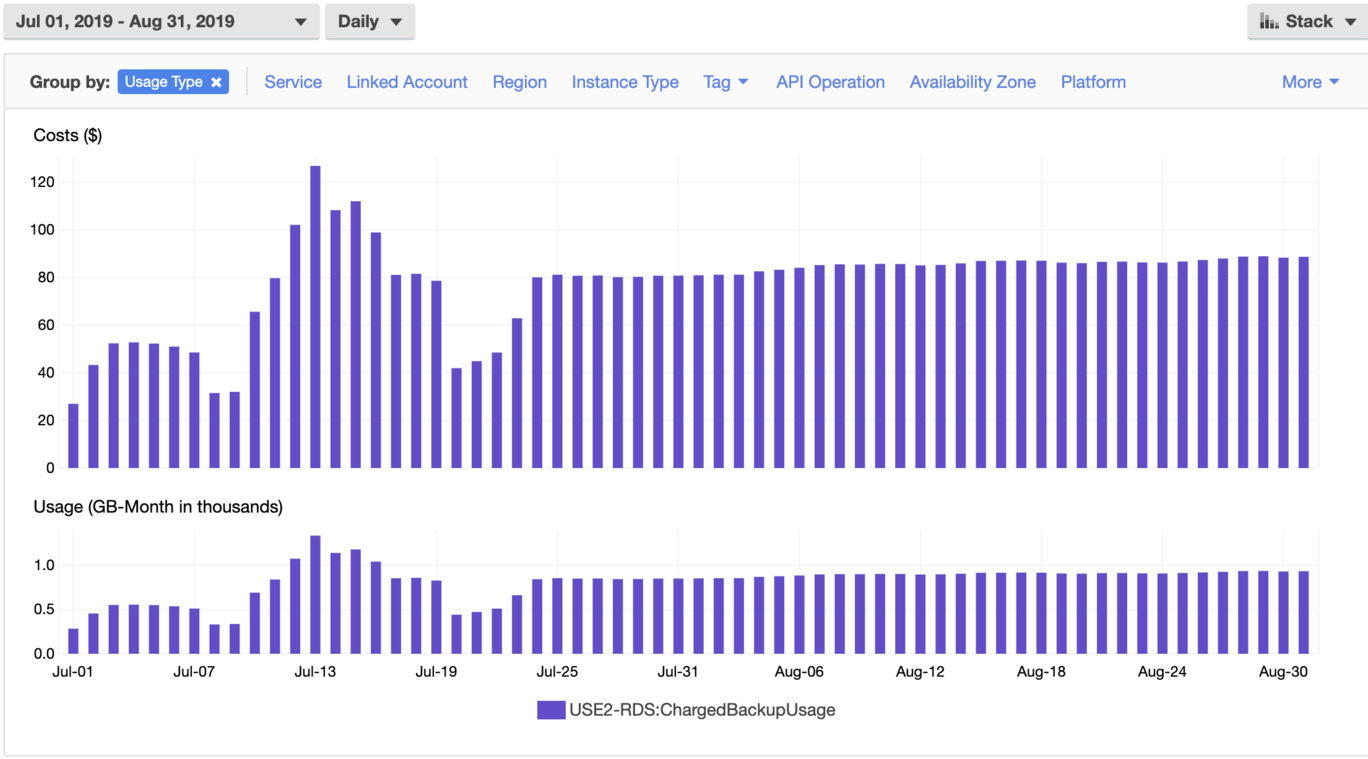

- USE2-RDS:ChargedBackupUsage - $2,660.57 (+22%)

- These are the normal, non-disaster-recovery backups

- These are run every day and increase with database storage usage. The bigger our database, the more we can expect these backups to cost.

- Our daily spend here has been increasing slowly since July. I don’t expect it to increase much in September.

- USE2-HeavyUsage:db.r4.8xlarge - $1,649.89 (0%)

- This is the reserve price for one MySQL replica.

- We reserved this in July, so we’ll continue to pay this much for the next year.

- USE2-RDS:Multi-AZ-GP2-Storage - $1,406.53 (+31%)

- This is the cost for us to have storage available for our database in multiple Availability Zones.

- Multiple Availability Zones are useful in case there is a service outage in a single Availability Zone in a Region

- Our database has 5400GB of storage capacity on GP2 drives. That storage space is available in three different Zones in our Region.

- We will see an increase in this bill in the upcoming months because we’ve added more storage early in September to accommodate our growth.

- USE2-RDS:GP2-Storage - $1,039.91 (-14%)

- We decreased our total storage in July when we finished moving off Provisioned IOPS (PIOPS) storage and deleted our two biggest tables.

- We might have decreased it a little too much because we almost ran out of storage early in September.

- We can expect this bill to increase in September because we added more storage to accommodate our growth.
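The reserve-or-remove decision for the db.r4.8xlarge replica can be framed as a break-even: a reservation is billed for every hour whether the instance runs or not, so it only pays off if the replica would otherwise run on-demand for more than some fraction of the time. This sketch uses the two db.r4.8xlarge line items above and assumes both are full-month charges for the same configuration, with no upfront reservation fee amortized elsewhere; the helper name is hypothetical.

```python
ON_DEMAND = 2_856.96  # USE2-InstanceUsage:db.r4.8xlarge, August
RESERVED = 1_649.89   # USE2-HeavyUsage:db.r4.8xlarge, August


def breakeven_utilization(reserved_monthly, on_demand_monthly):
    """Fraction of the month an on-demand instance must run before a reservation
    (billed every hour whether used or not) becomes the cheaper option."""
    return reserved_monthly / on_demand_monthly


ratio = breakeven_utilization(RESERVED, ON_DEMAND)
yearly_savings = (ON_DEMAND - RESERVED) * 12  # if the replica runs all year
print(f"break-even: {ratio:.1%} of the time")
print(f"savings if kept all year: ${yearly_savings:,.2f}")
```

Under those assumptions, reserving pays off if the replica would otherwise run more than about 58% of the time, and keeping it reserved all year would save roughly $14,485 compared with on-demand.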

Final Thoughts on RDS

Our RDS bill is finally in a stable place. While it will increase slowly over time as we grow, it shouldn’t go up by thousands of dollars every month like it did in the past. Our application and infrastructure are very stable now, and the places that might become bottlenecks in the future do not include MySQL. The price we pay for RDS in September should be about what we pay every month for the foreseeable future. I’m happy that our database spend is finally predictable and that the stability of the application isn’t determined by the day-to-day health of our MySQL database.

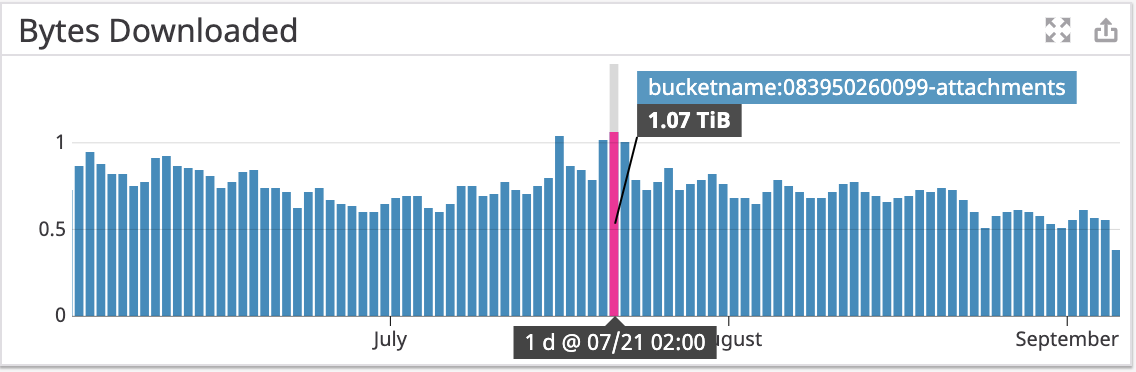

S3 - $11,107.75 (0%)

Service breakdown

- USE2-DataTransfer-Out-Bytes - $4,989.41 (+5%)

- This is the cost for us to download objects from S3 in the us-east-2 region.

- The main buckets to focus on here are our normal attachments bucket and our filekit attachments bucket.

- The normal attachments bucket has nearly 800GB of objects stored in it, and downloads from it reach up to 1TB of data per day

- Data transfer costs money, so we need to put more efficient caching in front of this bucket and improve the caching on the buckets where we’ve already implemented it.

- There is a noticeable trend showing a decrease in use from this bucket. That’s because more people are beginning to use the new email editor and those attachments are downloaded from the filekit attachments bucket.

- The filekit attachments bucket has 215GB of objects stored in it.

- I realized while writing this that we don’t have advanced monitoring turned on for that bucket, so I’m unsure of the download activity on a day-to-day basis. I have turned that on so we’ll have a good idea of activity by next month.

- This bill will go up as more people begin to use the new email editor.

- DataTransfer-Out-Bytes - $3,961.82 (-11%)

- This is the cost for us to download objects from our legacy attachments bucket.

- We implemented caching here a few months ago, and we’re now beginning to see some success with our data transfer costs, although it’s not quite as noticeable as I was hoping.

- It’s also likely that the decrease here is due to increased use of the new email editor. As more people begin to use that, we will see an increase in downloads from the filekit attachments bucket and a decrease from the legacy attachments bucket.

- USE2-TimedStorage-ByteHrs - $1,283.67 (-3%)

- This is the cost of the byte-hours that our data was stored in Standard storage

- We’ve shortened the retention periods for many of our buckets. However, we’ve also fixed some of our backups, so we’ll likely pay about 7% more here in the future.

- We could see some wins here by changing the storage class of some of our backups from Standard to Infrequent Access. Standard storage is meant for objects that are uploaded and downloaded frequently, while Infrequent Access storage is meant for objects that are uploaded and only occasionally downloaded.

- Infrequent Access storage is cheaper for keeping a lot of rarely accessed data for a long time, but it gets expensive if we download from it every day because retrievals are charged per gigabyte.

- Standard storage is more expensive to have data for a long time but is cheaper to download from every day.
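To make the Standard versus Infrequent Access trade-off concrete, here is a rough Python cost model. The per-GB prices are assumptions based on published us-east list prices around the time of writing (check current S3 pricing before relying on them), and the model ignores request fees, Standard-IA's minimum storage duration and object size, and data transfer out, which costs the same for both classes.

```python
# Assumed per-GB prices; check the current S3 pricing page for us-east-2.
STANDARD_STORAGE = 0.023  # $ per GB-month
IA_STORAGE = 0.0125       # $ per GB-month
IA_RETRIEVAL = 0.01       # $ per GB retrieved; Standard has no retrieval fee


def monthly_cost(stored_gb, retrieved_gb, storage_class):
    """Storage plus retrieval cost for one month (request fees not included)."""
    if storage_class == "STANDARD":
        return stored_gb * STANDARD_STORAGE
    if storage_class == "STANDARD_IA":
        return stored_gb * IA_STORAGE + retrieved_gb * IA_RETRIEVAL
    raise ValueError(f"unknown storage class: {storage_class}")


def breakeven_reads_per_month():
    """How many times per month the full dataset can be read before
    Standard-IA stops being cheaper than Standard."""
    return (STANDARD_STORAGE - IA_STORAGE) / IA_RETRIEVAL


# 1,000 GB of data: read 100 GB in a month vs. downloaded in full every day.
for label, read_gb in [("rarely read backups", 100), ("downloaded daily", 30_000)]:
    standard = monthly_cost(1_000, read_gb, "STANDARD")
    ia = monthly_cost(1_000, read_gb, "STANDARD_IA")
    print(f"{label}: Standard ${standard:,.2f} vs Standard-IA ${ia:,.2f}")
```

Under these assumed prices, Standard-IA stays cheaper until the equivalent of the whole dataset is read about 1.05 times a month, which is why it suits backups but not buckets we download from every day.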

Final Thoughts on S3

We’re fighting a data transfer battle here, but we are making progress. The techniques we’ve used in the past are paying off for our legacy buckets, and if we implement those same strategies for our new buckets, we could see more billing wins. It costs money to send email attachments: if someone with a 1,000,000-subscriber email list sends out a direct S3 link to a 1MB file and every subscriber downloads it, we’ll have to pay for 1TB of data transfer. Solving this problem is something we’ll need to keep working on as we scale.
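The attachment example is a good back-of-the-envelope calculation. This sketch assumes every subscriber downloads the file exactly once, uses decimal units (1TB = 1,000GB = 1,000,000MB), and plugs in an assumed $0.09/GB internet data transfer rate; the real rate depends on the pricing tier and on whether a CDN serves the file. Both helpers are illustrative names.

```python
def send_transfer_gb(subscribers, attachment_mb):
    """Data transferred if every subscriber downloads the attachment once
    (decimal units: 1 GB = 1,000 MB)."""
    return subscribers * attachment_mb / 1_000


def transfer_cost(gb, price_per_gb):
    """Flat-rate data transfer cost; real AWS pricing is tiered by volume."""
    return gb * price_per_gb


gb = send_transfer_gb(1_000_000, 1)
print(f"{gb:,.0f} GB")
print(f"${transfer_cost(gb, 0.09):,.2f} at an assumed $0.09/GB")
```

One million 1MB downloads is 1,000GB, or about $90 at that assumed rate, for a single send.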

EC2-Other - $5,026.44 (+3%)

There are other costs associated with running EC2 instances. For us, that means data transfer out to the internet from behind our NAT Gateway, data transfer to other regions, and the cost of storage attached to the EC2 instances themselves. This bill will shrink as we optimize our EC2-Instances bill.

Service breakdown

- USE2-NatGateway-Bytes - $1,473.04 (+31%)

- Most of our services are in a private subnet behind a NAT Gateway. That means they don’t have direct access to the internet.

- Any time one of these services needs to communicate with the internet, that data must flow through the NAT Gateway.

- This data includes API calls to third-party services and even the logs we send to our logging provider.

- We’re currently sending about 1.1TB of data per day through our NAT Gateway.

- We send over 100GB of logs per day to our logging provider. We plan to migrate away from them and run our own logging infrastructure, and we’ll see some billing wins when that happens.

- USE2-DataTransfer-Regional-Bytes - $1,414.79 (-15%)

- This is the cost of our applications communicating between regions.

- The majority of this bill currently comes from our disaster recovery backups, which replicate data between AWS accounts and regions.

- We’ll have to pay more here when we add our secondary Cassandra cluster and data is synced between the two of them.

- We can view this bill as insurance for system outages and protection of our most important data.

- USE2-EBS:VolumeUsage.gp2 - $1,404.02 (-10%)

- This is the cost of our gp2 EBS volumes, the disks attached to our EC2 instances

- We’re charged per gigabyte of provisioned storage per month. The more EC2 instances we have, the more disks we have, and the more gigabytes we pay for.

- For now, this bill shouldn’t increase much. I don’t expect our EC2 instance count to increase much, and we rarely need to attach additional volumes to our instances.

- We’ve already migrated any instances that are likely to run into disk usage problems to SSD

Final Thoughts on EC2-Other

Our costs here increased because we’re sending more data through our NAT Gateway. As we begin to move more services in-house, we won’t be paying as much for data transfer out to the internet. The costs here are also going to vary with the costs that we pay for the EC2 instances themselves. We can expect the amount we pay for EC2-Other to be proportional to the number of EC2 Instances we’re running in our entire fleet. As we continue to optimize our overall infrastructure and reduce the number of instances we’re running, we should see this cost decrease as well.
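The NAT Gateway line item scales with the volume of data the gateway processes. This sketch assumes a flat $0.045/GB data-processing rate (the published us-east-2 rate as far as we know; check current VPC pricing) and a steady daily volume, and it leaves out the hourly gateway charge. `nat_processing_cost` is a hypothetical helper.

```python
NAT_PROCESSING_PER_GB = 0.045  # assumed us-east-2 NAT Gateway data-processing rate


def nat_processing_cost(gb_per_day, days, rate=NAT_PROCESSING_PER_GB):
    """Monthly NAT Gateway data-processing charge for a steady daily volume
    (excludes the hourly gateway charge and any data transfer fees)."""
    return gb_per_day * days * rate


total = nat_processing_cost(1_100, 31)  # about 1.1 TB/day through the gateway
logs = nat_processing_cost(100, 31)     # about 100 GB/day of logs
print(f"all traffic: ${total:,.2f}; logs alone: ${logs:,.2f} ({logs / total:.0%})")
```

About 1.1TB per day for 31 days comes to roughly $1,535, in the same ballpark as the $1,473.04 line item given that the daily volume is approximate. The logs alone account for about $140 of that, so if they no longer left through the gateway, this line would shrink by roughly a tenth.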

Support - $4,378.71 (-14%)

Support cost is based on our total bill. Support is down 14% while the whole bill is down 10% because of the way AWS charges for Business Support: the first $10K of usage is charged at 10%, and usage from $10K to $80K is charged at 7%. Our numbers look weird because we don’t pay for support across all of our AWS accounts; we only pay for support on the production and billing accounts.

Service breakdown

- 10% of monthly AWS usage for the first $0-$10K - $1,645.16 (-18%)

- 7% of monthly AWS usage from $10K-$80K - $2,733.55 (-10%)
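The tiered support fee works like marginal tax brackets: each slice of an account's usage pays its own tier's rate. This Python sketch implements that for a single account. The first two tiers are the ones described above; the higher tiers and the $100 monthly minimum are our understanding of AWS's Business Support pricing and should be treated as assumptions.

```python
# Business Support tiers as (upper bound of the tier in dollars, rate).
# The first two tiers are the ones described above; the rest are assumptions.
BUSINESS_SUPPORT_TIERS = [
    (10_000, 0.10),
    (80_000, 0.07),
    (250_000, 0.05),
    (float("inf"), 0.03),
]
MINIMUM_FEE = 100.0  # assumed monthly minimum per account


def business_support_fee(monthly_usage):
    """Tiered (marginal) Business Support fee for one account's monthly usage."""
    fee, lower = 0.0, 0.0
    for upper, rate in BUSINESS_SUPPORT_TIERS:
        if monthly_usage <= lower:
            break
        # Only the slice of usage that falls inside this tier pays this tier's rate.
        fee += (min(monthly_usage, upper) - lower) * rate
        lower = upper
    return max(fee, MINIMUM_FEE)


for usage in (50_000, 45_000):
    print(f"${usage:,} of usage -> ${business_support_fee(usage):,.2f} of support")
```

Within a single account, this schedule makes the fee shrink no faster than usage: dropping from $50,000 to $45,000 (-10%) lowers the fee from $3,800 to $3,450 (about -9%). So the gap between the 14% and 10% figures most likely comes from which accounts are charged, since support applies only to the production and billing accounts rather than the whole bill.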

Others - $5,885.88 (+7%)

Service breakdown

- CloudFront - $2,203.52 (+25%)

- This cost has gone up because we removed direct S3 links from the legacy email editor.

- We now use URLs that go through CloudFront to reduce cost on S3 data transfer.

- We have saved money overall with this change, but the savings are negligible, close to 0%.

- I still would like to get all URLs behind a CloudFront CDN. Doing so should further reduce our S3 costs, and I’m optimistic that in the long run, this change will save us more significant amounts of money.

- EC2-ELB - $1,360.29 (-1%)

- This is the cost of the load balancers that sit in front of our web applications.

- The most significant contributor to this cost is the amount of data being transferred out from behind the load balancers.

- There isn’t much we can do to decrease this. It can be viewed as a success tax. The more customers visiting our applications, the more we’ll have to pay for the load balancing costs.

Final Thoughts on Others

“Others” is not an actual AWS service name; it’s just the sum of the rest of the AWS services we use. CloudFront and EC2-ELB are the most expensive of those services by a large margin, and I expect it to stay this way for the foreseeable future. The next closest services only cost a few hundred dollars each. CloudFront is currently the most concerning because it’s increasing pretty quickly, but at the same time it is saving us a small amount of money. If it becomes problematic, we’ll have to solve our CDN problems a different way.

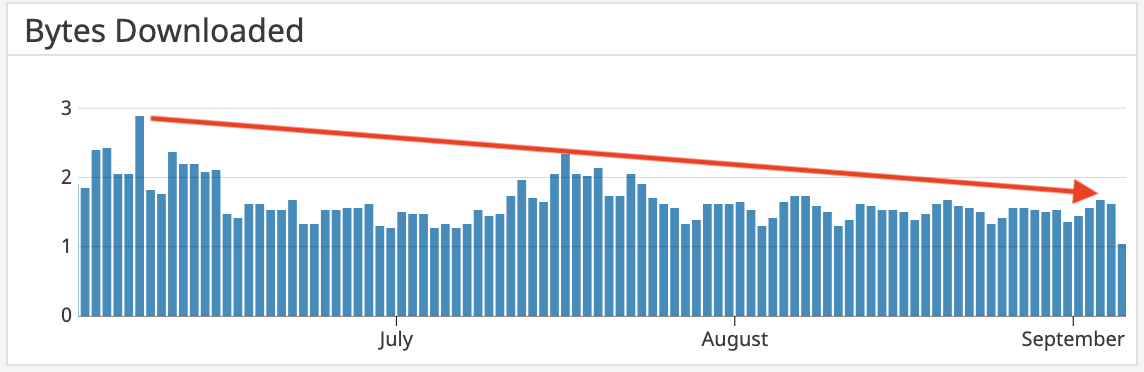

Conclusion

August was an exciting month! Our infrastructure is getting more stable by the day while also getting cheaper. This is a product of the entire engineering team putting in a tremendous amount of hard work to make an efficient, stable, and scalable product. We have a lot of room to improve, but we’re definitely headed in the right direction. Just take a look at the trendlines for our AWS bill in the year 2019.